diff --git a/apps/app/components/core/board-view/single-board.tsx b/apps/app/components/core/board-view/single-board.tsx

index 76226f0cf..16fe0e887 100644

--- a/apps/app/components/core/board-view/single-board.tsx

+++ b/apps/app/components/core/board-view/single-board.tsx

@@ -1,4 +1,4 @@

-import { useState, useEffect } from "react";

+import { useState } from "react";

import { useRouter } from "next/router";

@@ -60,51 +60,46 @@ export const SingleBoard: React.FC = ({

const isNotAllowed = userAuth.isGuest || userAuth.isViewer || isCompleted;

- useEffect(() => {

- if (currentState?.group === "completed" || currentState?.group === "cancelled")

- setIsCollapsed(false);

- }, [currentState]);

-

return (

-

-

-

- {isCollapsed && (

-

- {(provided, snapshot) => (

-

- {orderBy !== "sort_order" && (

- <>

-

-

- This board is ordered by{" "}

- {replaceUnderscoreIfSnakeCase(

- orderBy ? (orderBy[0] === "-" ? orderBy.slice(1) : orderBy) : "created_at"

- )}

-

-

- )}

+

+

+ {isCollapsed && (

+

+ {(provided, snapshot) => (

+

+ {orderBy !== "sort_order" && (

+ <>

+

+

+ This board is ordered by{" "}

+ {replaceUnderscoreIfSnakeCase(

+ orderBy ? (orderBy[0] === "-" ? orderBy.slice(1) : orderBy) : "created_at"

+ )}

+

+

+ )}

+

{groupedByIssues?.[groupTitle].map((issue, index) => (

= ({

>

{provided.placeholder}

+

+

{type === "issue" ? (

}

- optionsPosition="left"

+ position="left"

noBorder

>

@@ -182,10 +179,10 @@ export const SingleBoard: React.FC = ({

)

)}

- )}

-

- )}

-

+ )}

+

+ )}

{!isNotAllowed && (

-

+

{type && !isNotAllowed && (

-

+ setIsMenuActive(!isMenuActive)}

+ >

+

+

+ }

+ >

@@ -348,11 +360,6 @@ export const SingleBoardIssue: React.FC = ({

isNotAllowed={isNotAllowed}

/>

)}

- {properties.sub_issue_count && (

-

- {issue.sub_issues_count} {issue.sub_issues_count === 1 ? "sub-issue" : "sub-issues"}

-

- )}

{properties.labels && issue.label_details.length > 0 && (

{issue.label_details.map((label) => (

@@ -388,6 +395,16 @@ export const SingleBoardIssue: React.FC = ({

selfPositioned

/>

)}

+ {properties.sub_issue_count && (

+

+

+

+

+ {issue.sub_issues_count}

+

+

+

diff --git a/apps/app/components/core/bulk-delete-issues-modal.tsx b/apps/app/components/core/bulk-delete-issues-modal.tsx

index bd5e18e57..7fe2181f6 100644

--- a/apps/app/components/core/bulk-delete-issues-modal.tsx

+++ b/apps/app/components/core/bulk-delete-issues-modal.tsx

@@ -121,7 +121,7 @@ export const BulkDeleteIssuesModal: React.FC = ({ isOpen, setIsOpen }) =>

leaveFrom="opacity-100 scale-100"

leaveTo="opacity-0 scale-95"

>

-

+

+ );

+};

+

+export default CalendarHeader;

diff --git a/apps/app/components/core/calendar-view/calendar.tsx b/apps/app/components/core/calendar-view/calendar.tsx

index 9dfdac64e..ce453f898 100644

--- a/apps/app/components/core/calendar-view/calendar.tsx

+++ b/apps/app/components/core/calendar-view/calendar.tsx

@@ -1,9 +1,20 @@

-import React, { useState } from "react";

-import useSWR, { mutate } from "swr";

-import Link from "next/link";

+import React, { useEffect, useState } from "react";

+

import { useRouter } from "next/router";

-// helper

+import { mutate } from "swr";

+

+// react-beautiful-dnd

+import { DragDropContext, DropResult } from "react-beautiful-dnd";

+// services

+import issuesService from "services/issues.service";

+// hooks

+import useCalendarIssuesView from "hooks/use-calendar-issues-view";

+// components

+import { SingleCalendarDate, CalendarHeader } from "components/core";

+// ui

+import { Spinner } from "components/ui";

+// helpers

import { renderDateFormat } from "helpers/date-time.helper";

import {

startOfWeek,

@@ -11,156 +22,61 @@ import {

eachDayOfInterval,

weekDayInterval,

formatDate,

- getCurrentWeekStartDate,

- getCurrentWeekEndDate,

- subtractMonths,

- addMonths,

- updateDateWithYear,

- updateDateWithMonth,

- isSameMonth,

- isSameYear,

- subtract7DaysToDate,

- addSevenDaysToDate,

} from "helpers/calendar.helper";

-// ui

-import { Popover, Transition } from "@headlessui/react";

-import { DragDropContext, Draggable, DropResult } from "react-beautiful-dnd";

-import StrictModeDroppable from "components/dnd/StrictModeDroppable";

-import { CustomMenu, Spinner, ToggleSwitch } from "components/ui";

-// icon

+// types

+import { ICalendarRange, IIssue, UserAuth } from "types";

+// fetch-keys

import {

- CheckIcon,

- ChevronDownIcon,

- ChevronLeftIcon,

- ChevronRightIcon,

- PlusIcon,

-} from "@heroicons/react/24/outline";

-// hooks

-import useIssuesView from "hooks/use-issues-view";

-// services

-import issuesService from "services/issues.service";

-import cyclesService from "services/cycles.service";

-// fetch key

-import {

- CYCLE_CALENDAR_ISSUES,

- MODULE_CALENDAR_ISSUES,

- PROJECT_CALENDAR_ISSUES,

+ CYCLE_ISSUES_WITH_PARAMS,

+ MODULE_ISSUES_WITH_PARAMS,

+ PROJECT_ISSUES_LIST_WITH_PARAMS,

+ VIEW_ISSUES,

} from "constants/fetch-keys";

-// type

-import { IIssue } from "types";

-// constant

-import { monthOptions, yearOptions } from "constants/calendar";

-import modulesService from "services/modules.service";

-import { getStateGroupIcon } from "components/icons";

type Props = {

+ handleEditIssue: (issue: IIssue) => void;

+ handleDeleteIssue: (issue: IIssue) => void;

addIssueToDate: (date: string) => void;

+ isCompleted: boolean;

+ userAuth: UserAuth;

};

-interface ICalendarRange {

- startDate: Date;

- endDate: Date;

-}

-

-export const CalendarView: React.FC = ({ addIssueToDate }) => {

+export const CalendarView: React.FC = ({

+ handleEditIssue,

+ handleDeleteIssue,

+ addIssueToDate,

+ isCompleted = false,

+ userAuth,

+}) => {

const [showWeekEnds, setShowWeekEnds] = useState(false);

const [currentDate, setCurrentDate] = useState(new Date());

const [isMonthlyView, setIsMonthlyView] = useState(true);

- const [showAllIssues, setShowAllIssues] = useState(false);

- const router = useRouter();

- const { workspaceSlug, projectId, cycleId, moduleId } = router.query;

-

- const { params } = useIssuesView();

-

- const [calendarDateRange, setCalendarDateRange] = useState({

+ const [calendarDates, setCalendarDates] = useState({

startDate: startOfWeek(currentDate),

endDate: lastDayOfWeek(currentDate),

});

- const targetDateFilter = {

- target_date: `${renderDateFormat(calendarDateRange.startDate)};after,${renderDateFormat(

- calendarDateRange.endDate

- )};before`,

- };

+ const router = useRouter();

+ const { workspaceSlug, projectId, cycleId, moduleId, viewId } = router.query;

- const { data: projectCalendarIssues } = useSWR(

- workspaceSlug && projectId ? PROJECT_CALENDAR_ISSUES(projectId as string) : null,

- workspaceSlug && projectId

- ? () =>

- issuesService.getIssuesWithParams(workspaceSlug as string, projectId as string, {

- ...params,

- target_date: `${renderDateFormat(calendarDateRange.startDate)};after,${renderDateFormat(

- calendarDateRange.endDate

- )};before`,

- group_by: null,

- })

- : null

- );

-

- const { data: cycleCalendarIssues } = useSWR(

- workspaceSlug && projectId && cycleId

- ? CYCLE_CALENDAR_ISSUES(projectId as string, cycleId as string)

- : null,

- workspaceSlug && projectId && cycleId

- ? () =>

- cyclesService.getCycleIssuesWithParams(

- workspaceSlug as string,

- projectId as string,

- cycleId as string,

- {

- ...params,

- target_date: `${renderDateFormat(

- calendarDateRange.startDate

- )};after,${renderDateFormat(calendarDateRange.endDate)};before`,

- group_by: null,

- }

- )

- : null

- );

-

- const { data: moduleCalendarIssues } = useSWR(

- workspaceSlug && projectId && moduleId

- ? MODULE_CALENDAR_ISSUES(projectId as string, moduleId as string)

- : null,

- workspaceSlug && projectId && moduleId

- ? () =>

- modulesService.getModuleIssuesWithParams(

- workspaceSlug as string,

- projectId as string,

- moduleId as string,

- {

- ...params,

- target_date: `${renderDateFormat(

- calendarDateRange.startDate

- )};after,${renderDateFormat(calendarDateRange.endDate)};before`,

- group_by: null,

- }

- )

- : null

- );

+ const { calendarIssues, params, setCalendarDateRange } = useCalendarIssuesView();

const totalDate = eachDayOfInterval({

- start: calendarDateRange.startDate,

- end: calendarDateRange.endDate,

+ start: calendarDates.startDate,

+ end: calendarDates.endDate,

});

const onlyWeekDays = weekDayInterval({

- start: calendarDateRange.startDate,

- end: calendarDateRange.endDate,

+ start: calendarDates.startDate,

+ end: calendarDates.endDate,

});

const currentViewDays = showWeekEnds ? totalDate : onlyWeekDays;

- const calendarIssues = cycleId

- ? (cycleCalendarIssues as IIssue[])

- : moduleId

- ? (moduleCalendarIssues as IIssue[])

- : (projectCalendarIssues as IIssue[]);

-

const currentViewDaysData = currentViewDays.map((date: Date) => {

const filterIssue =

- calendarIssues && calendarIssues.length > 0

+ calendarIssues.length > 0

? calendarIssues.filter(

(issue) =>

issue.target_date && renderDateFormat(issue.target_date) === renderDateFormat(date)

@@ -191,13 +107,16 @@ export const CalendarView: React.FC = ({ addIssueToDate }) => {

const { source, destination, draggableId } = result;

if (!destination || !workspaceSlug || !projectId) return;

+

if (source.droppableId === destination.droppableId) return;

const fetchKey = cycleId

- ? CYCLE_CALENDAR_ISSUES(projectId as string, cycleId as string)

+ ? CYCLE_ISSUES_WITH_PARAMS(cycleId.toString(), params)

: moduleId

- ? MODULE_CALENDAR_ISSUES(projectId as string, moduleId as string)

- : PROJECT_CALENDAR_ISSUES(projectId as string);

+ ? MODULE_ISSUES_WITH_PARAMS(moduleId.toString(), params)

+ : viewId

+ ? VIEW_ISSUES(viewId.toString(), params)

+ : PROJECT_ISSUES_LIST_WITH_PARAMS(projectId.toString(), params);

mutate(

fetchKey,

@@ -208,314 +127,105 @@ export const CalendarView: React.FC = ({ addIssueToDate }) => {

...p,

target_date: destination.droppableId,

};

+

return p;

}),

false

);

- issuesService.patchIssue(workspaceSlug as string, projectId as string, draggableId, {

- target_date: destination?.droppableId,

- });

+ issuesService

+ .patchIssue(workspaceSlug as string, projectId as string, draggableId, {

+ target_date: destination?.droppableId,

+ })

+ .then(() => mutate(fetchKey));

};

- const updateDate = (date: Date) => {

- setCurrentDate(date);

-

- setCalendarDateRange({

- startDate: startOfWeek(date),

- endDate: lastDayOfWeek(date),

+ const changeDateRange = (startDate: Date, endDate: Date) => {

+ setCalendarDates({

+ startDate,

+ endDate,

});

+

+ setCalendarDateRange(

+ `${renderDateFormat(startDate)};after,${renderDateFormat(endDate)};before`

+ );

};

+ useEffect(() => {

+ setCalendarDateRange(

+ `${renderDateFormat(startOfWeek(currentDate))};after,${renderDateFormat(

+ lastDayOfWeek(currentDate)

+ )};before`

+ );

+ }, [currentDate]);

+

+ const isNotAllowed = userAuth.isGuest || userAuth.isViewer || isCompleted;

+

return calendarIssues ? (

-

-

-

-

-

- {({ open }) => (

- <>

-

-

- {formatDate(currentDate, "Month")}{" "}

- {formatDate(currentDate, "yyyy")}

-

-

+

+

+

+

-

-

-

- {yearOptions.map((year) => (

-

- ))}

-

-

- {monthOptions.map((month) => (

-

- ))}

-

-

-

-

- )}

-

-

-

-

-

-

-

-

-

-

- {isMonthlyView ? "Monthly" : "Weekly"}

-

-

- Monthly View

-

-

-

- {

- setIsMonthlyView(false);

- setCalendarDateRange({

- startDate: getCurrentWeekStartDate(currentDate),

- endDate: getCurrentWeekEndDate(currentDate),

- });

- }}

- className="w-52 text-sm text-brand-secondary"

- >

-

- Weekly View

-

-

-

-

-

Show weekends

- setShowWeekEnds(!showWeekEnds)}

- />

-

-

-

-

-

- {weeks.map((date, index) => (

-

-

- {isMonthlyView ? formatDate(date, "eee").substring(0, 3) : formatDate(date, "eee")}

-

- {!isMonthlyView && {formatDate(date, "d")}}

-

- ))}

-

-

-

- {currentViewDaysData.map((date, index) => {

- const totalIssues = date.issues.length;

-

- return (

-

- {(provided) => (

-

+ {weeks.map((date, index) => (

+

- {isMonthlyView && {formatDate(new Date(date.date), "d")}}

- {totalIssues > 0 &&

- date.issues

- .slice(0, showAllIssues ? totalIssues : 4)

- .map((issue: IIssue, index) => (

-

- {(provided, snapshot) => (

-

- )}

-

- ))}

- {totalIssues > 4 && (

-

- )}

-

-

-

- {provided.placeholder}

-

- )}

-

- );

- })}

+ : (index + 1) % 5 === 0

+ ? ""

+ : "border-r"

+ : ""

+ }`}

+ >

+

+ {isMonthlyView

+ ? formatDate(date, "eee").substring(0, 3)

+ : formatDate(date, "eee")}

+

+ {!isMonthlyView && {formatDate(date, "d")}}

+

+

+

+ {currentViewDaysData.map((date, index) => (

+

+ ))}

+

-

diff --git a/apps/app/components/core/calendar-view/index.ts b/apps/app/components/core/calendar-view/index.ts

index 55608c7e8..625ff1fb4 100644

--- a/apps/app/components/core/calendar-view/index.ts

+++ b/apps/app/components/core/calendar-view/index.ts

@@ -1 +1,4 @@

-export * from "./calendar"

\ No newline at end of file

+export * from "./calendar-header";

+export * from "./calendar";

+export * from "./single-date";

+export * from "./single-issue";

diff --git a/apps/app/components/core/calendar-view/single-date.tsx b/apps/app/components/core/calendar-view/single-date.tsx

new file mode 100644

index 000000000..fd552188c

--- /dev/null

+++ b/apps/app/components/core/calendar-view/single-date.tsx

@@ -0,0 +1,107 @@

+import React, { useState } from "react";

+

+// react-beautiful-dnd

+import { Draggable } from "react-beautiful-dnd";

+// component

+import StrictModeDroppable from "components/dnd/StrictModeDroppable";

+import { SingleCalendarIssue } from "./single-issue";

+// icons

+import { PlusSmallIcon } from "@heroicons/react/24/outline";

+// helper

+import { formatDate } from "helpers/calendar.helper";

+// types

+import { IIssue } from "types";

+

+type Props = {

+ handleEditIssue: (issue: IIssue) => void;

+ handleDeleteIssue: (issue: IIssue) => void;

+ index: number;

+ date: {

+ date: string;

+ issues: IIssue[];

+ };

+ addIssueToDate: (date: string) => void;

+ isMonthlyView: boolean;

+ showWeekEnds: boolean;

+ isNotAllowed: boolean;

+};

+

+export const SingleCalendarDate: React.FC = ({

+ handleEditIssue,

+ handleDeleteIssue,

+ date,

+ index,

+ addIssueToDate,

+ isMonthlyView,

+ showWeekEnds,

+ isNotAllowed,

+}) => {

+ const [showAllIssues, setShowAllIssues] = useState(false);

+

+ const totalIssues = date.issues.length;

+

+ return (

+

+ {(provided) => (

+

+ {isMonthlyView && {formatDate(new Date(date.date), "d")}}

+ {totalIssues > 0 &&

+ date.issues.slice(0, showAllIssues ? totalIssues : 4).map((issue: IIssue, index) => (

+

+ {(provided, snapshot) => (

+

+ )}

+

+ ))}

+ {totalIssues > 4 && (

+

+ )}

+

+

+

+

+

+ {provided.placeholder}

+

+

+ {!isNotAllowed && (

+

+

+ handleEditIssue(issue)}>

+

+

+ handleDeleteIssue(issue)}>

+

+

+ Delete issue

+

+

+

+

+

+ Copy issue Link

+

+

+

+

+ )}

+

+

+ {properties.key && (

+

+

+ {issue.project_detail?.identifier}-{issue.sequence_id}

+

+

+ )}

+

+ {truncateText(issue.name, 25)}

+

+

+

+ {displayProperties && (

+

+ {properties.priority && (

+

+ )}

+ {properties.state && (

+

+ )}

+

+ {properties.due_date && (

+

+ )}

+ {properties.labels && issue.label_details.length > 0 ? (

+

+ {issue.label_details.map((label) => (

+

+

+ {label.name}

+

+ ))}

+

+ ) : (

+ ""

+ )}

+ {properties.assignee && (

+

+ )}

+ {properties.estimate && (

+

+ )}

+ {properties.sub_issue_count && (

+

+

+

+

+ {issue.sub_issues_count}

+

+

+

+ )}

+ {properties.link && (

+

+

+

+

+ {issue.link_count}

+

+

+

+ )}

+ {properties.attachment_count && (

+

+

+

+

+ {issue.attachment_count}

+

+

+ )}

+

+ )}

+

+

+

+

+

+ {({ open }) => (

+ <>

+

+ {watch(name) && watch(name) !== "" ? (

+

+ ) : (

+

+ )}

+

+

+

+

+

+

+

+

+ )}

+

+

+

${content}

`);

+ }, [htmlContent, editorRef, content]);

+

return (

- {((content && content !== "") || htmlContent !== "

") && (

+ {((content && content !== "") || (htmlContent && htmlContent !== "

")) && (

Content:

-

{content}}

customClassName="-m-3"

noBorder

borderOnFocus={false}

editable={false}

+ ref={editorRef}

/>

)}

diff --git a/apps/app/components/core/image-upload-modal.tsx b/apps/app/components/core/image-upload-modal.tsx

index ba41f3efa..2ede6e8d7 100644

--- a/apps/app/components/core/image-upload-modal.tsx

+++ b/apps/app/components/core/image-upload-modal.tsx

@@ -110,7 +110,7 @@ export const ImageUploadModal: React.FC = ({

leaveFrom="opacity-100"

leaveTo="opacity-0"

>

-

+

@@ -124,7 +124,7 @@ export const ImageUploadModal: React.FC = ({

leaveFrom="opacity-100 translate-y-0 sm:scale-100"

leaveTo="opacity-0 translate-y-4 sm:translate-y-0 sm:scale-95"

>

-

+

Upload Image

@@ -133,9 +133,9 @@ export const ImageUploadModal: React.FC = ({

@@ -143,7 +143,7 @@ export const ImageUploadModal: React.FC = ({

<>

@@ -152,17 +152,18 @@ export const ImageUploadModal: React.FC = ({

objectFit="cover"

src={image ? URL.createObjectURL(image) : value ? value : ""}

alt="image"

+ className="rounded-lg"

/>

) : (

- <>

-

-

+

+

+

{isDragActive

? "Drop image here to upload"

: "Drag & drop image here"}

-

+

)}

diff --git a/apps/app/components/core/index.ts b/apps/app/components/core/index.ts

index eb547578c..e3e187d60 100644

--- a/apps/app/components/core/index.ts

+++ b/apps/app/components/core/index.ts

@@ -1,14 +1,18 @@

export * from "./board-view";

+export * from "./calendar-view";

+export * from "./gantt-chart-view";

export * from "./list-view";

export * from "./sidebar";

export * from "./bulk-delete-issues-modal";

export * from "./existing-issues-list-modal";

+export * from "./filters-list";

export * from "./gpt-assistant-modal";

export * from "./image-upload-modal";

export * from "./issues-view-filter";

export * from "./issues-view";

export * from "./link-modal";

export * from "./image-picker-popover";

-export * from "./filter-list";

export * from "./feeds";

export * from "./theme-switch";

+export * from "./custom-theme-selector";

+export * from "./color-picker-input";

diff --git a/apps/app/components/core/issues-view-filter.tsx b/apps/app/components/core/issues-view-filter.tsx

index 6868cf7b0..6856e8f8b 100644

--- a/apps/app/components/core/issues-view-filter.tsx

+++ b/apps/app/components/core/issues-view-filter.tsx

@@ -17,6 +17,7 @@ import {

ListBulletIcon,

Squares2X2Icon,

CalendarDaysIcon,

+ ChartBarIcon,

} from "@heroicons/react/24/outline";

// helpers

import { replaceUnderscoreIfSnakeCase } from "helpers/string.helper";

@@ -82,6 +83,17 @@ export const IssuesFilterView: React.FC = () => {

>

+

- {issueView !== "calendar" && (

-

- Display Properties

-

- {Object.keys(properties).map((key) => {

- if (key === "estimate" && !isEstimateActive) return null;

- return (

-

- );

- })}

-

+

+ Display Properties

+

+ {Object.keys(properties).map((key) => {

+ if (key === "estimate" && !isEstimateActive) return null;

+

+ return (

+

+ );

+ })}

- )}

+

- {areFiltersApplied && (

-

{

- if (viewId) {

- setFilters({}, true);

- setToastAlert({

- title: "View updated",

- message: "Your view has been updated",

- type: "success",

- });

- } else

- setCreateViewModal({

- query: filters,

- });

- }}

- className="flex items-center gap-2 text-sm"

- >

- {!viewId && }

- {viewId ? "Update" : "Save"} view

-

- )}

+

{

+ if (viewId) {

+ setFilters({}, true);

+ setToastAlert({

+ title: "View updated",

+ message: "Your view has been updated",

+ type: "success",

+ });

+ } else

+ setCreateViewModal({

+ query: filters,

+ });

+ }}

+ className="flex items-center gap-2 text-sm"

+ >

+ {!viewId && }

+ {viewId ? "Update" : "Save"} view

+

{}

@@ -478,14 +476,14 @@ export const IssuesView: React.FC = ({

- Drop issue here to delete

+ Drop here to delete the issue.

)}

@@ -532,8 +530,16 @@ export const IssuesView: React.FC = ({

isCompleted={isCompleted}

userAuth={memberRole}

/>

+ ) : issueView === "calendar" ? (

+

) : (

-

+ issueView === "gantt_chart" &&

)}

) : type === "issue" ? (

diff --git a/apps/app/components/core/list-view/single-issue.tsx b/apps/app/components/core/list-view/single-issue.tsx

index dbcb87451..6f6a5ab4f 100644

--- a/apps/app/components/core/list-view/single-issue.tsx

+++ b/apps/app/components/core/list-view/single-issue.tsx

@@ -31,6 +31,7 @@ import {

ArrowTopRightOnSquareIcon,

PaperClipIcon,

} from "@heroicons/react/24/outline";

+import { LayerDiagonalIcon } from "components/icons";

// helpers

import { copyTextToClipboard, truncateText } from "helpers/string.helper";

import { handleIssuesMutation } from "constants/issue";

@@ -84,7 +85,7 @@ export const SingleListIssue: React.FC = ({

const { groupByProperty: selectedGroup, orderBy, params } = useIssueView();

const partialUpdateIssue = useCallback(

- (formData: Partial) => {

+ (formData: Partial, issueId: string) => {

if (!workspaceSlug || !projectId) return;

if (cycleId)

@@ -140,7 +141,7 @@ export const SingleListIssue: React.FC = ({

);

issuesService

- .patchIssue(workspaceSlug as string, projectId as string, issue.id, formData)

+ .patchIssue(workspaceSlug as string, projectId as string, issueId, formData)

.then(() => {

if (cycleId) {

mutate(CYCLE_ISSUES_WITH_PARAMS(cycleId as string, params));

@@ -151,18 +152,7 @@ export const SingleListIssue: React.FC = ({

} else mutate(PROJECT_ISSUES_LIST_WITH_PARAMS(projectId as string, params));

});

},

- [

- workspaceSlug,

- projectId,

- cycleId,

- moduleId,

- issue,

- groupTitle,

- index,

- selectedGroup,

- orderBy,

- params,

- ]

+ [workspaceSlug, projectId, cycleId, moduleId, groupTitle, index, selectedGroup, orderBy, params]

);

const handleCopyText = () => {

@@ -216,7 +206,7 @@ export const SingleListIssue: React.FC = ({

{

e.preventDefault();

setContextMenu(true);

@@ -224,7 +214,7 @@ export const SingleListIssue: React.FC = ({

}}

>

-

+

-

+

{properties.priority && (

= ({

isNotAllowed={isNotAllowed}

/>

)}

- {properties.sub_issue_count && (

-

- {issue.sub_issues_count} {issue.sub_issues_count === 1 ? "sub-issue" : "sub-issues"}

-

- )}

{properties.labels && issue.label_details.length > 0 ? (

{issue.label_details.map((label) => (

@@ -310,6 +295,16 @@ export const SingleListIssue: React.FC = ({

isNotAllowed={isNotAllowed}

/>

)}

+ {properties.sub_issue_count && (

+

+

+

+

+ {issue.sub_issues_count}

+

+

+

diff --git a/apps/app/components/core/list-view/single-list.tsx b/apps/app/components/core/list-view/single-list.tsx

index abe82ed73..dd5ffb110 100644

--- a/apps/app/components/core/list-view/single-list.tsx

+++ b/apps/app/components/core/list-view/single-list.tsx

@@ -168,7 +168,7 @@ export const SingleList: React.FC = ({

}

- optionsPosition="right"

+ position="right"

noBorder

>

Create new

@@ -204,7 +204,8 @@ export const SingleList: React.FC = ({

makeIssueCopy={() => makeIssueCopy(issue)}

handleDeleteIssue={handleDeleteIssue}

removeIssue={() => {

- if (removeIssue !== null && issue.bridge_id) removeIssue(issue.bridge_id, issue.id);

+ if (removeIssue !== null && issue.bridge_id)

+ removeIssue(issue.bridge_id, issue.id);

}}

isCompleted={isCompleted}

userAuth={userAuth}

diff --git a/apps/app/components/core/sidebar/progress-chart.tsx b/apps/app/components/core/sidebar/progress-chart.tsx

index f8454650e..9006b58fc 100644

--- a/apps/app/components/core/sidebar/progress-chart.tsx

+++ b/apps/app/components/core/sidebar/progress-chart.tsx

@@ -12,9 +12,11 @@ type Props = {

issues: IIssue[];

start: string;

end: string;

+ width?: number;

+ height?: number;

};

-const ProgressChart: React.FC = ({ issues, start, end }) => {

+const ProgressChart: React.FC = ({ issues, start, end, width = 360, height = 160 }) => {

const startDate = new Date(start);

const endDate = new Date(end);

const getChartData = () => {

@@ -51,8 +53,8 @@ const ProgressChart: React.FC = ({ issues, start, end }) => {

return (

= ({

issues,

module,

userAuth,

+ roundedTab,

+ noBackground,

}) => {

const router = useRouter();

const { workspaceSlug, projectId } = router.query;

@@ -100,12 +104,16 @@ export const SidebarProgressStats: React.FC = ({

>

- `w-full rounded px-3 py-1 text-brand-base ${

+ `w-full ${

+ roundedTab ? "rounded-3xl border border-brand-base" : "rounded"

+ } px-3 py-1 text-brand-base ${

selected ? " bg-brand-accent text-white" : " hover:bg-brand-surface-2"

}`

}

@@ -114,7 +122,9 @@ export const SidebarProgressStats: React.FC = ({

- `w-full rounded px-3 py-1 text-brand-base ${

+ `w-full ${

+ roundedTab ? "rounded-3xl border border-brand-base" : "rounded"

+ } px-3 py-1 text-brand-base ${

selected ? " bg-brand-accent text-white" : " hover:bg-brand-surface-2"

}`

}

@@ -123,7 +133,9 @@ export const SidebarProgressStats: React.FC = ({

- `w-full rounded px-3 py-1 text-brand-base ${

+ `w-full ${

+ roundedTab ? "rounded-3xl border border-brand-base" : "rounded"

+ } px-3 py-1 text-brand-base ${

selected ? " bg-brand-accent text-white" : " hover:bg-brand-surface-2"

}`

}

@@ -131,10 +143,10 @@ export const SidebarProgressStats: React.FC = ({

States

-

+

{members?.map((member, index) => {

- const totalArray = issues?.filter((i) => i.assignees?.includes(member.member.id));

+ const totalArray = issues?.filter((i) => i?.assignees?.includes(member.member.id));

const completeArray = totalArray?.filter((i) => i.state_detail.group === "completed");

if (totalArray.length > 0) {

@@ -150,19 +162,19 @@ export const SidebarProgressStats: React.FC = ({

completed={completeArray.length}

total={totalArray.length}

onClick={() => {

- if (filters.assignees?.includes(member.member.id))

+ if (filters?.assignees?.includes(member.member.id))

setFilters({

- assignees: filters.assignees?.filter((a) => a !== member.member.id),

+ assignees: filters?.assignees?.filter((a) => a !== member.member.id),

});

else

setFilters({ assignees: [...(filters?.assignees ?? []), member.member.id] });

}}

- selected={filters.assignees?.includes(member.member.id)}

+ selected={filters?.assignees?.includes(member.member.id)}

/>

);

}

})}

- {issues?.filter((i) => i.assignees?.length === 0).length > 0 ? (

+ {issues?.filter((i) => i?.assignees?.length === 0).length > 0 ? (

@@ -180,10 +192,10 @@ export const SidebarProgressStats: React.FC = ({

}

completed={

issues?.filter(

- (i) => i.state_detail.group === "completed" && i.assignees?.length === 0

+ (i) => i?.state_detail.group === "completed" && i.assignees?.length === 0

).length

}

- total={issues?.filter((i) => i.assignees?.length === 0).length}

+ total={issues?.filter((i) => i?.assignees?.length === 0).length}

/>

) : (

""

@@ -191,8 +203,8 @@ export const SidebarProgressStats: React.FC = ({

{issueLabels?.map((label, index) => {

- const totalArray = issues?.filter((i) => i.labels?.includes(label.id));

- const completeArray = totalArray?.filter((i) => i.state_detail.group === "completed");

+ const totalArray = issues?.filter((i) => i?.labels?.includes(label.id));

+ const completeArray = totalArray?.filter((i) => i?.state_detail.group === "completed");

if (totalArray.length > 0) {

return (

@@ -207,7 +219,7 @@ export const SidebarProgressStats: React.FC = ({

label.color && label.color !== "" ? label.color : "#000000",

}}

/>

- {label.name}

+ {label?.name}

}

completed={completeArray.length}

@@ -215,11 +227,11 @@ export const SidebarProgressStats: React.FC = ({

onClick={() => {

if (filters.labels?.includes(label.id))

setFilters({

- labels: filters.labels?.filter((l) => l !== label.id),

+ labels: filters?.labels?.filter((l) => l !== label.id),

});

else setFilters({ labels: [...(filters?.labels ?? []), label.id] });

}}

- selected={filters.labels?.includes(label.id)}

+ selected={filters?.labels?.includes(label.id)}

/>

);

}

diff --git a/apps/app/components/core/sidebar/single-progress-stats.tsx b/apps/app/components/core/sidebar/single-progress-stats.tsx

index 3e672ce8b..d8236de9b 100644

--- a/apps/app/components/core/sidebar/single-progress-stats.tsx

+++ b/apps/app/components/core/sidebar/single-progress-stats.tsx

@@ -18,7 +18,7 @@ export const SingleProgressStats: React.FC = ({

selected = false,

}) => (

= ({

- {Math.floor((completed / total) * 100)}%

+

+ {isNaN(Math.floor((completed / total) * 100))

+ ? "0"

+ : Math.floor((completed / total) * 100)}

+ %

+

+

+ {currentThemeObj.label}

+

+

+

+

+

+

+

+ Progress

+

+

+

+ {Object.keys(groupedIssues).map((group, index) => (

+

+

+ {group}

+

+ }

+ completed={groupedIssues[group]}

+ total={cycle.total_issues}

+ />

+ ))}

+

+

+

+

+

+

+

+

+ High Priority Issues

+

+

+ {issues

+ ?.filter((issue) => issue.priority === "urgent" || issue.priority === "high")

+ .map((issue) => (

+

+

+

+

+

+ {issue.project_detail?.identifier}-{issue.sequence_id}

+

+

+

+

+

+ {truncateText(issue.name, 30)}

+

+

+

+

+

+

+ {getPriorityIcon(issue.priority, "text-sm")}

+

+ {issue.label_details.length > 0 ? (

+

+ {issue.label_details.map((label) => (

+

+

+ {label.name}

+

+ ))}

+

+ ) : (

+ ""

+ )}

+

+ {issue.assignees &&

+ issue.assignees.length > 0 &&

+ Array.isArray(issue.assignees) ? (

+

+ ) : (

+ ""

+ )}

+

+

+

+ ))}

+

+

+

+

+

+

+ issue?.state_detail?.group === "completed" &&

+ (issue?.priority === "urgent" || issue?.priority === "high")

+ )?.length /

+ issues?.filter(

+ (issue) => issue?.priority === "urgent" || issue?.priority === "high"

+ )?.length) *

+ 100 ?? 0

+ }%`,

+ }}

+ />

+

+

+ {

+ issues?.filter(

+ (issue) =>

+ issue?.state_detail?.group === "completed" &&

+ (issue?.priority === "urgent" || issue?.priority === "high")

+ )?.length

+ }{" "}

+ of{" "}

+ {

+ issues?.filter(

+ (issue) => issue?.priority === "urgent" || issue?.priority === "high"

+ )?.length

+ }

+

+

+

+

+

+

+

+

+ Ideal

+

+

+

+ Current

+

+

+

+

+

+

+

+ Pending Issues -{" "}

+ {cycle.total_issues - (cycle.completed_issues + cycle.cancelled_issues)}

+

+

+

+

+

+

+

+

+

+ No assignee

+

+ }

+ completed={

+ issues?.filter(

+ (i) => i?.state_detail.group === "completed" && i.assignees?.length === 0

+ ).length

+ }

+ total={issues?.filter((i) => i?.assignees?.length === 0).length}

+ />

+ ) : (

+ ""

+ )}

+

+

+ {issueLabels?.map((label, index) => {

+ const totalArray = issues?.filter((i) => i?.labels?.includes(label.id));

+ const completeArray = totalArray?.filter((i) => i?.state_detail.group === "completed");

+

+ if (totalArray.length > 0) {

+ return (

+

+

+ {label?.name}

+

+ }

+ completed={completeArray.length}

+ total={totalArray.length}

+ />

+ );

+ }

+ })}

+

+

+

+ );

+};

diff --git a/apps/app/components/cycles/cycles-list.tsx b/apps/app/components/cycles/all-cycles-board.tsx

similarity index 96%

rename from apps/app/components/cycles/cycles-list.tsx

rename to apps/app/components/cycles/all-cycles-board.tsx

index f8d607dbe..259251fe6 100644

--- a/apps/app/components/cycles/cycles-list.tsx

+++ b/apps/app/components/cycles/all-cycles-board.tsx

@@ -17,7 +17,7 @@ type TCycleStatsViewProps = {

type: "current" | "upcoming" | "draft";

};

-export const CyclesList: React.FC = ({

+export const AllCyclesBoard: React.FC = ({

cycles,

setCreateUpdateCycleModal,

setSelectedCycle,

@@ -61,7 +61,7 @@ export const CyclesList: React.FC = ({

- No current cycle is present.

+ No cycle is present.

) : (

>;

+ setSelectedCycle: React.Dispatch>;

+ type: "current" | "upcoming" | "draft";

+};

+

+export const AllCyclesList: React.FC = ({

+ cycles,

+ setCreateUpdateCycleModal,

+ setSelectedCycle,

+ type,

+}) => {

+ const [cycleDeleteModal, setCycleDeleteModal] = useState(false);

+ const [selectedCycleForDelete, setSelectedCycleForDelete] = useState();

+

+ const handleDeleteCycle = (cycle: ICycle) => {

+ setSelectedCycleForDelete({ ...cycle, actionType: "delete" });

+ setCycleDeleteModal(true);

+ };

+

+ const handleEditCycle = (cycle: ICycle) => {

+ setSelectedCycle({ ...cycle, actionType: "edit" });

+ setCreateUpdateCycleModal(true);

+ };

+

+ return (

+ <>

+

+ {cycles ? (

+ cycles.length > 0 ? (

+

+ {cycles.map((cycle) => (

+

+

+ handleDeleteCycle(cycle)}

+ handleEditCycle={() => handleEditCycle(cycle)}

+ />

+

+

+ ))}

+

+

No cycle is present.

+

+ ) : (

+

+ )

+ ) : (

+

+

+

+ )}

+

+ );

+};

diff --git a/apps/app/components/cycles/completed-cycles-list.tsx b/apps/app/components/cycles/completed-cycles.tsx

similarity index 65%

rename from apps/app/components/cycles/completed-cycles-list.tsx

rename to apps/app/components/cycles/completed-cycles.tsx

index 6729ceeeb..36ef691f5 100644

--- a/apps/app/components/cycles/completed-cycles-list.tsx

+++ b/apps/app/components/cycles/completed-cycles.tsx

@@ -7,9 +7,9 @@ import useSWR from "swr";

// services

import cyclesService from "services/cycles.service";

// components

-import { DeleteCycleModal, SingleCycleCard } from "components/cycles";

+import { DeleteCycleModal, SingleCycleCard, SingleCycleList } from "components/cycles";

// icons

-import { CompletedCycleIcon, ExclamationIcon } from "components/icons";

+import { ExclamationIcon } from "components/icons";

// types

import { ICycle, SelectCycleType } from "types";

// fetch-keys

@@ -19,11 +19,13 @@ import { EmptyState, Loader } from "components/ui";

import emptyCycle from "public/empty-state/empty-cycle.svg";

export interface CompletedCyclesListProps {

+ cycleView: string;

setCreateUpdateCycleModal: React.Dispatch<React.SetStateAction<boolean>>;

setSelectedCycle: React.Dispatch<React.SetStateAction<SelectCycleType>>;

}

-export const CompletedCyclesList: React.FC = ({

+export const CompletedCycles: React.FC = ({

+ cycleView,

setCreateUpdateCycleModal,

setSelectedCycle,

}) => {

@@ -72,17 +74,36 @@ export const CompletedCyclesList: React.FC = ({

/>

Completed cycles are not editable.

- {completedCycles.completed_cycles.map((cycle) => (

- handleDeleteCycle(cycle)}

- handleEditCycle={() => handleEditCycle(cycle)}

- isCompleted

- />

- ))}

-

+ {cycleView === "list" && (

+

+ {completedCycles.completed_cycles.map((cycle) => (

+

+

+ handleDeleteCycle(cycle)}

+ handleEditCycle={() => handleEditCycle(cycle)}

+ isCompleted

+ />

+

+

+ ))}

+

+ {completedCycles.completed_cycles.map((cycle) => (

+ handleDeleteCycle(cycle)}

+ handleEditCycle={() => handleEditCycle(cycle)}

+ isCompleted

+ />

+ ))}

+

+ )}

) : (

= ({ cycles }) => {

+ const router = useRouter();

+ const { workspaceSlug, projectId } = router.query;

+

+ // rendering issues on gantt sidebar

+ const GanttSidebarBlockView = ({ data }: any) => (

+

+ );

+

+ // rendering issues on gantt card

+ const GanttBlockView = ({ data }: { data: ICycle }) => (

+

+

+

+

+ {data?.name}

+

+

+

+ );

+

+ // handle gantt issue start date and target date

+ const handleUpdateDates = async (data: any) => {

+ const payload = {

+ id: data?.id,

+ start_date: data?.start_date,

+ target_date: data?.target_date,

+ };

+ };

+

+ const blockFormat = (blocks: any) =>

+ blocks && blocks.length > 0

+ ? blocks.map((_block: any) => {

+ if (_block?.start_date && _block.target_date) console.log("_block", _block);

+ return {

+ start_date: new Date(_block.created_at),

+ target_date: new Date(_block.updated_at),

+ data: _block,

+ };

+ })

+ : [];

+

+ return (

+

+ }

+ blockRender={(data: any) => }

+ />

+

+ );

+};

diff --git a/apps/app/components/cycles/cycles-view.tsx b/apps/app/components/cycles/cycles-view.tsx

new file mode 100644

index 000000000..aedd82ba3

--- /dev/null

+++ b/apps/app/components/cycles/cycles-view.tsx

@@ -0,0 +1,249 @@

+import React, { useEffect } from "react";

+import dynamic from "next/dynamic";

+// headless ui

+import { Tab } from "@headlessui/react";

+// hooks

+import useLocalStorage from "hooks/use-local-storage";

+// components

+import {

+ ActiveCycleDetails,

+ CompletedCyclesListProps,

+ AllCyclesBoard,

+ AllCyclesList,

+ CyclesListGanttChartView,

+} from "components/cycles";

+// ui

+import { EmptyState, Loader } from "components/ui";

+// icons

+import { ChartBarIcon, ListBulletIcon, Squares2X2Icon } from "@heroicons/react/24/outline";

+import emptyCycle from "public/empty-state/empty-cycle.svg";

+// types

+import {

+ SelectCycleType,

+ ICycle,

+ CurrentAndUpcomingCyclesResponse,

+ DraftCyclesResponse,

+} from "types";

+

+type Props = {

+ setSelectedCycle: React.Dispatch<React.SetStateAction<SelectCycleType>>;

+ setCreateUpdateCycleModal: React.Dispatch<React.SetStateAction<boolean>>;

+ cyclesCompleteList: ICycle[] | undefined;

+ currentAndUpcomingCycles: CurrentAndUpcomingCyclesResponse | undefined;

+ draftCycles: DraftCyclesResponse | undefined;

+};

+

+export const CyclesView: React.FC = ({

+ setSelectedCycle,

+ setCreateUpdateCycleModal,

+ cyclesCompleteList,

+ currentAndUpcomingCycles,

+ draftCycles,

+}) => {

+ const { storedValue: cycleTab, setValue: setCycleTab } = useLocalStorage("cycleTab", "All");

+ const { storedValue: cyclesView, setValue: setCyclesView } = useLocalStorage("cycleView", "list");

+

+ const currentTabValue = (tab: string | null) => {

+ switch (tab) {

+ case "All":

+ return 0;

+ case "Active":

+ return 1;

+ case "Upcoming":

+ return 2;

+ case "Completed":

+ return 3;

+ case "Drafts":

+ return 4;

+ default:

+ return 0;

+ }

+ };

+

+ const CompletedCycles = dynamic(

+ () => import("components/cycles").then((a) => a.CompletedCycles),

+ {

+ ssr: false,

+ loading: () => (

+

+

+

+ ),

+ }

+ );

+

+ return (

+ <>

+

+

Cycles

+

+

+

+

+

+

+

+ {["All", "Active", "Upcoming", "Completed", "Drafts"].map((tab, index) => {

+ if (

+ cyclesView === "gantt_chart" &&

+ (tab === "Active" || tab === "Drafts" || tab === "Completed")

+ )

+ return null;

+

+ return (

+

+ `rounded-3xl border px-6 py-1 outline-none ${

+ selected

+ ? "border-brand-accent bg-brand-accent text-white font-medium"

+ : "border-brand-base bg-brand-base hover:bg-brand-surface-2"

+ }`

+ }

+ >

+ {tab}

+

+ );

+ })}

+

+

+

+

+ {cyclesView === "list" && (

+

+ )}

+ {cyclesView === "board" && (

+

+ )}

+ {cyclesView === "gantt_chart" && (

+

+ )}

+

+ {cyclesView !== "gantt_chart" && (

+

+ {currentAndUpcomingCycles?.current_cycle?.[0] ? (

+

+ ) : (

+

+ )}

+

+ )}

+

+ {cyclesView === "list" && (

+

+ )}

+ {cyclesView === "board" && (

+

+ )}

+ {cyclesView === "gantt_chart" && (

+

+ )}

+

+

+

+

+ {cyclesView !== "gantt_chart" && (

+

+ {cyclesView === "list" && (

+

+ )}

+ {cyclesView === "board" && (

+

+ )}

+

+ )}

+

+

+

+ );

+};

diff --git a/apps/app/components/cycles/delete-cycle-modal.tsx b/apps/app/components/cycles/delete-cycle-modal.tsx

index 58b670f3a..136a9b847 100644

--- a/apps/app/components/cycles/delete-cycle-modal.tsx

+++ b/apps/app/components/cycles/delete-cycle-modal.tsx

@@ -29,6 +29,7 @@ type TConfirmCycleDeletionProps = {

import {

CYCLE_COMPLETE_LIST,

CYCLE_CURRENT_AND_UPCOMING_LIST,

+ CYCLE_DETAILS,

CYCLE_DRAFT_LIST,

CYCLE_LIST,

} from "constants/fetch-keys";

@@ -114,6 +115,14 @@ export const DeleteCycleModal: React.FC = ({

false

);

}

+ mutate(

+ CYCLE_DETAILS(projectId as string),

+ (prevData: any) => {

+ if (!prevData) return;

+ return prevData.filter((cycle: any) => cycle.id !== data?.id);

+ },

+ false

+ );

handleClose();

setToastAlert({

diff --git a/apps/app/components/cycles/gantt-chart.tsx b/apps/app/components/cycles/gantt-chart.tsx

new file mode 100644

index 000000000..44abc392b

--- /dev/null

+++ b/apps/app/components/cycles/gantt-chart.tsx

@@ -0,0 +1,83 @@

+import { FC } from "react";

+// next imports

+import Link from "next/link";

+import { useRouter } from "next/router";

+// components

+import { GanttChartRoot } from "components/gantt-chart";

+// hooks

+import useGanttChartCycleIssues from "hooks/gantt-chart/cycle-issues-view";

+

+type Props = {};

+

+export const CycleIssuesGanttChartView: FC = ({}) => {

+ const router = useRouter();

+ const { workspaceSlug, projectId, cycleId } = router.query;

+

+ const { ganttIssues, mutateGanttIssues } = useGanttChartCycleIssues(

+ workspaceSlug as string,

+ projectId as string,

+ cycleId as string

+ );

+

+ // rendering issues on gantt sidebar

+ const GanttSidebarBlockView = ({ data }: any) => (

+

+ );

+

+ // rendering issues on gantt card

+ const GanttBlockView = ({ data }: any) => (

+

+

+

+

+ {data?.name}

+

+

+

+ );

+

+ // handle gantt issue start date and target date

+ const handleUpdateDates = async (data: any) => {

+ const payload = {

+ id: data?.id,

+ start_date: data?.start_date,

+ target_date: data?.target_date,

+ };

+

+ console.log("payload", payload);

+ };

+

+ const blockFormat = (blocks: any) =>

+ blocks && blocks.length > 0

+ ? blocks.map((_block: any) => {

+ if (_block?.start_date && _block.target_date) console.log("_block", _block);

+ return {

+ start_date: new Date(_block.created_at),

+ target_date: new Date(_block.updated_at),

+ data: _block,

+ };

+ })

+ : [];

+

+ return (

+

+ }

+ blockRender={(data: any) => }

+ />

+

+ );

+};

diff --git a/apps/app/components/cycles/index.ts b/apps/app/components/cycles/index.ts

index 558801598..3151529c5 100644

--- a/apps/app/components/cycles/index.ts

+++ b/apps/app/components/cycles/index.ts

@@ -1,11 +1,18 @@

-export * from "./completed-cycles-list";

-export * from "./cycles-list";

+export * from "./active-cycle-details";

+export * from "./cycles-view";

+export * from "./completed-cycles";

+export * from "./cycles-list-gantt-chart";

+export * from "./all-cycles-board";

+export * from "./all-cycles-list";

export * from "./delete-cycle-modal";

export * from "./form";

+export * from "./gantt-chart";

export * from "./modal";

export * from "./select";

export * from "./sidebar";

+export * from "./single-cycle-list";

export * from "./single-cycle-card";

export * from "./empty-cycle";

export * from "./transfer-issues-modal";

-export * from "./transfer-issues";

\ No newline at end of file

+export * from "./transfer-issues";

+export * from "./active-cycle-stats";

diff --git a/apps/app/components/cycles/modal.tsx b/apps/app/components/cycles/modal.tsx

index 968c6f46e..b3baacd65 100644

--- a/apps/app/components/cycles/modal.tsx

+++ b/apps/app/components/cycles/modal.tsx

@@ -20,6 +20,7 @@ import type { ICycle } from "types";

import {

CYCLE_COMPLETE_LIST,

CYCLE_CURRENT_AND_UPCOMING_LIST,

+ CYCLE_DETAILS,

CYCLE_DRAFT_LIST,

CYCLE_INCOMPLETE_LIST,

} from "constants/fetch-keys";

@@ -58,6 +59,7 @@ export const CreateUpdateCycleModal: React.FC = ({

mutate(CYCLE_DRAFT_LIST(projectId as string));

}

mutate(CYCLE_INCOMPLETE_LIST(projectId as string));

+ mutate(CYCLE_DETAILS(projectId as string));

handleClose();

setToastAlert({

@@ -92,6 +94,7 @@ export const CreateUpdateCycleModal: React.FC = ({

default:

mutate(CYCLE_DRAFT_LIST(projectId as string));

}

+ mutate(CYCLE_DETAILS(projectId as string));

if (

getDateRangeStatus(data?.start_date, data?.end_date) !=

getDateRangeStatus(res.start_date, res.end_date)

@@ -157,14 +160,10 @@ export const CreateUpdateCycleModal: React.FC = ({

title: "Error!",

message: "Unable to create cycle in past date. Please enter a valid date.",

});

+ handleClose();

return;

}

- const isDateValid = await dateChecker({

- start_date: payload.start_date,

- end_date: payload.end_date,

- });

-

if (data?.start_date && data?.end_date) {

const isDateValidForExistingCycle = await dateChecker({

start_date: payload.start_date,

@@ -182,10 +181,16 @@ export const CreateUpdateCycleModal: React.FC = ({

message:

"You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

});

+ handleClose();

return;

}

}

+ const isDateValid = await dateChecker({

+ start_date: payload.start_date,

+ end_date: payload.end_date,

+ });

+

if (isDateValid) {

if (data) {

await updateCycle(data.id, payload);

@@ -199,6 +204,7 @@ export const CreateUpdateCycleModal: React.FC = ({

message:

"You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

});

+ handleClose();

}

} else {

if (data) {

diff --git a/apps/app/components/cycles/sidebar.tsx b/apps/app/components/cycles/sidebar.tsx

index 91e592e7d..494383fc4 100644

--- a/apps/app/components/cycles/sidebar.tsx

+++ b/apps/app/components/cycles/sidebar.tsx

@@ -8,7 +8,6 @@ import useSWR, { mutate } from "swr";

// react-hook-form

import { useForm } from "react-hook-form";

import { Disclosure, Popover, Transition } from "@headlessui/react";

-import DatePicker from "react-datepicker";

// icons

import {

CalendarDaysIcon,

@@ -21,7 +20,7 @@ import {

LinkIcon,

} from "@heroicons/react/24/outline";

// ui

-import { CustomMenu, Loader, ProgressBar } from "components/ui";

+import { CustomMenu, CustomRangeDatePicker, Loader, ProgressBar } from "components/ui";

// hooks

import useToast from "hooks/use-toast";

// services

@@ -34,7 +33,11 @@ import { DeleteCycleModal } from "components/cycles";

import { ExclamationIcon } from "components/icons";

// helpers

import { capitalizeFirstLetter, copyTextToClipboard } from "helpers/string.helper";

-import { isDateRangeValid, renderDateFormat, renderShortDate } from "helpers/date-time.helper";

+import {

+ isDateGreaterThanToday,

+ renderDateFormat,

+ renderShortDate,

+} from "helpers/date-time.helper";

// types

import { ICycle, IIssue } from "types";

// fetch-keys

@@ -77,7 +80,7 @@ export const CycleDetailsSidebar: React.FC = ({

: null

);

- const { reset, watch } = useForm({

+ const { setValue, reset, watch } = useForm({

defaultValues,

});

@@ -122,6 +125,166 @@ export const CycleDetailsSidebar: React.FC = ({

});

}, [cycle, reset]);

+ const dateChecker = async (payload: any) => {

+ try {

+ const res = await cyclesService.cycleDateCheck(

+ workspaceSlug as string,

+ projectId as string,

+ payload

+ );

+ return res.status;

+ } catch (err) {

+ return false;

+ }

+ };

+

+ const handleStartDateChange = async (date: string) => {

+ setValue("start_date", date);

+ if (

+ watch("start_date") &&

+ watch("end_date") &&

+ watch("start_date") !== "" &&

+ watch("end_date") &&

+ watch("end_date") !== ""

+ ) {

+ if (!isDateGreaterThanToday(`${watch("end_date")}`)) {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message: "Unable to create cycle in past date. Please enter a valid date.",

+ });

+ return;

+ }

+

+ if (cycle?.start_date && cycle?.end_date) {

+ const isDateValidForExistingCycle = await dateChecker({

+ start_date: `${watch("start_date")}`,

+ end_date: `${watch("end_date")}`,

+ cycle_id: cycle.id,

+ });

+

+ if (isDateValidForExistingCycle) {

+ await submitChanges({

+ start_date: renderDateFormat(`${watch("start_date")}`),

+ end_date: renderDateFormat(`${watch("end_date")}`),

+ });

+ setToastAlert({

+ type: "success",

+ title: "Success!",

+ message: "Cycle updated successfully.",

+ });

+ return;

+ } else {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message:

+ "You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

+ });

+ return;

+ }

+ }

+

+ const isDateValid = await dateChecker({

+ start_date: `${watch("start_date")}`,

+ end_date: `${watch("end_date")}`,

+ });

+

+ if (isDateValid) {

+ await submitChanges({

+ start_date: renderDateFormat(`${watch("start_date")}`),

+ end_date: renderDateFormat(`${watch("end_date")}`),

+ });

+ setToastAlert({

+ type: "success",

+ title: "Success!",

+ message: "Cycle updated successfully.",

+ });

+ } else {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message:

+ "You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

+ });

+ }

+ }

+ };

+

+ const handleEndDateChange = async (date: string) => {

+ setValue("end_date", date);

+

+ if (

+ watch("start_date") &&

+ watch("end_date") &&

+ watch("start_date") !== "" &&

+ watch("end_date") &&

+ watch("end_date") !== ""

+ ) {

+ if (!isDateGreaterThanToday(`${watch("end_date")}`)) {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message: "Unable to create cycle in past date. Please enter a valid date.",

+ });

+ return;

+ }

+

+ if (cycle?.start_date && cycle?.end_date) {

+ const isDateValidForExistingCycle = await dateChecker({

+ start_date: `${watch("start_date")}`,

+ end_date: `${watch("end_date")}`,

+ cycle_id: cycle.id,

+ });

+

+ if (isDateValidForExistingCycle) {

+ await submitChanges({

+ start_date: renderDateFormat(`${watch("start_date")}`),

+ end_date: renderDateFormat(`${watch("end_date")}`),

+ });

+ setToastAlert({

+ type: "success",

+ title: "Success!",

+ message: "Cycle updated successfully.",

+ });

+ return;

+ } else {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message:

+ "You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

+ });

+ return;

+ }

+ }

+

+ const isDateValid = await dateChecker({

+ start_date: `${watch("start_date")}`,

+ end_date: `${watch("end_date")}`,

+ });

+

+ if (isDateValid) {

+ await submitChanges({

+ start_date: renderDateFormat(`${watch("start_date")}`),

+ end_date: renderDateFormat(`${watch("end_date")}`),

+ });

+ setToastAlert({

+ type: "success",

+ title: "Success!",

+ message: "Cycle updated successfully.",

+ });

+ } else {

+ setToastAlert({

+ type: "error",

+ title: "Error!",

+ message:

+ "You have a cycle already on the given dates, if you want to create your draft cycle you can do that by removing dates",

+ });

+ }

+ }

+ };

+

const isStartValid = new Date(`${cycle?.start_date}`) <= new Date();

const isEndValid = new Date(`${cycle?.end_date}`) >= new Date(`${cycle?.start_date}`);

@@ -133,9 +296,9 @@ export const CycleDetailsSidebar: React.FC = ({

<>

+

+

+

+

+

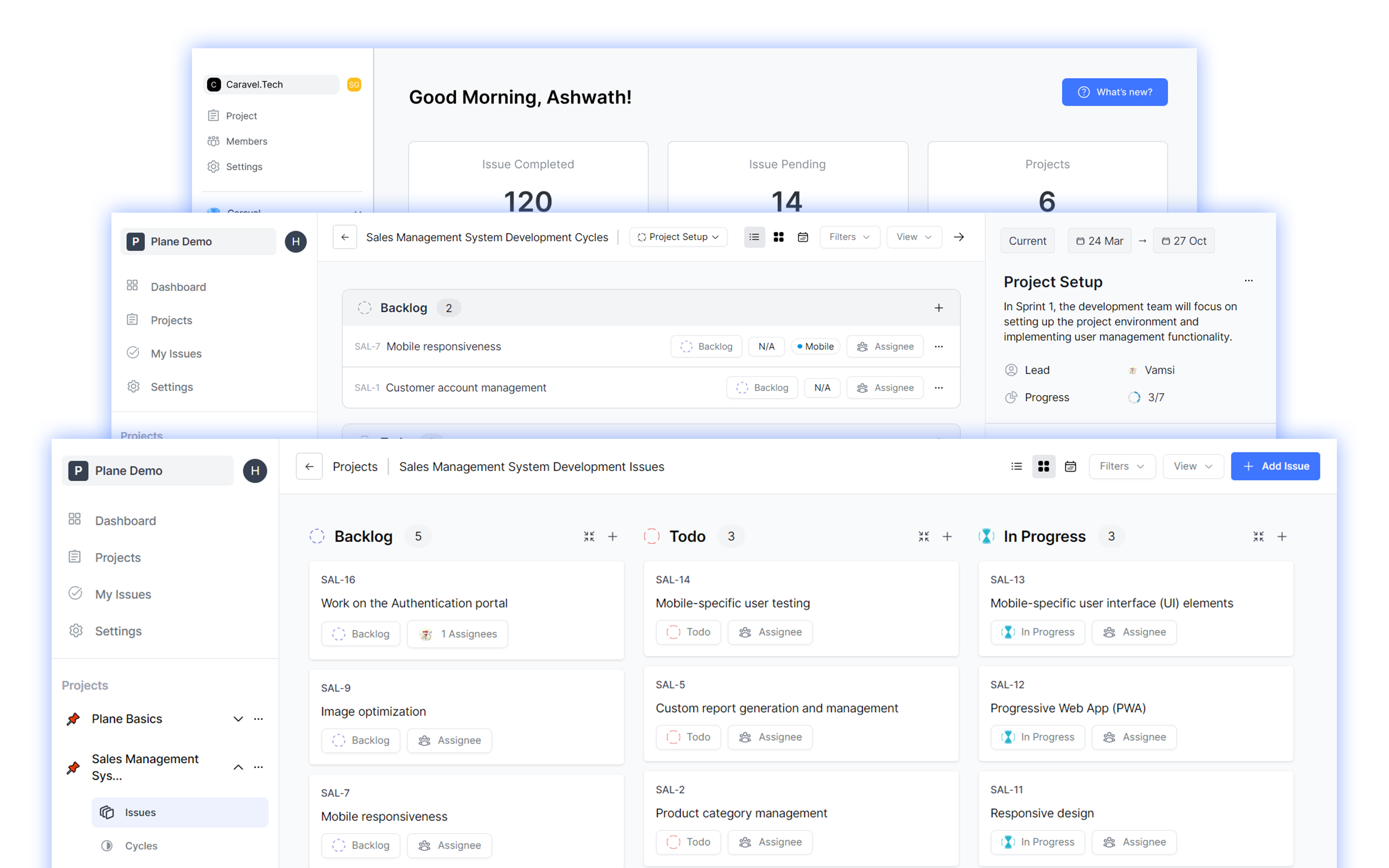

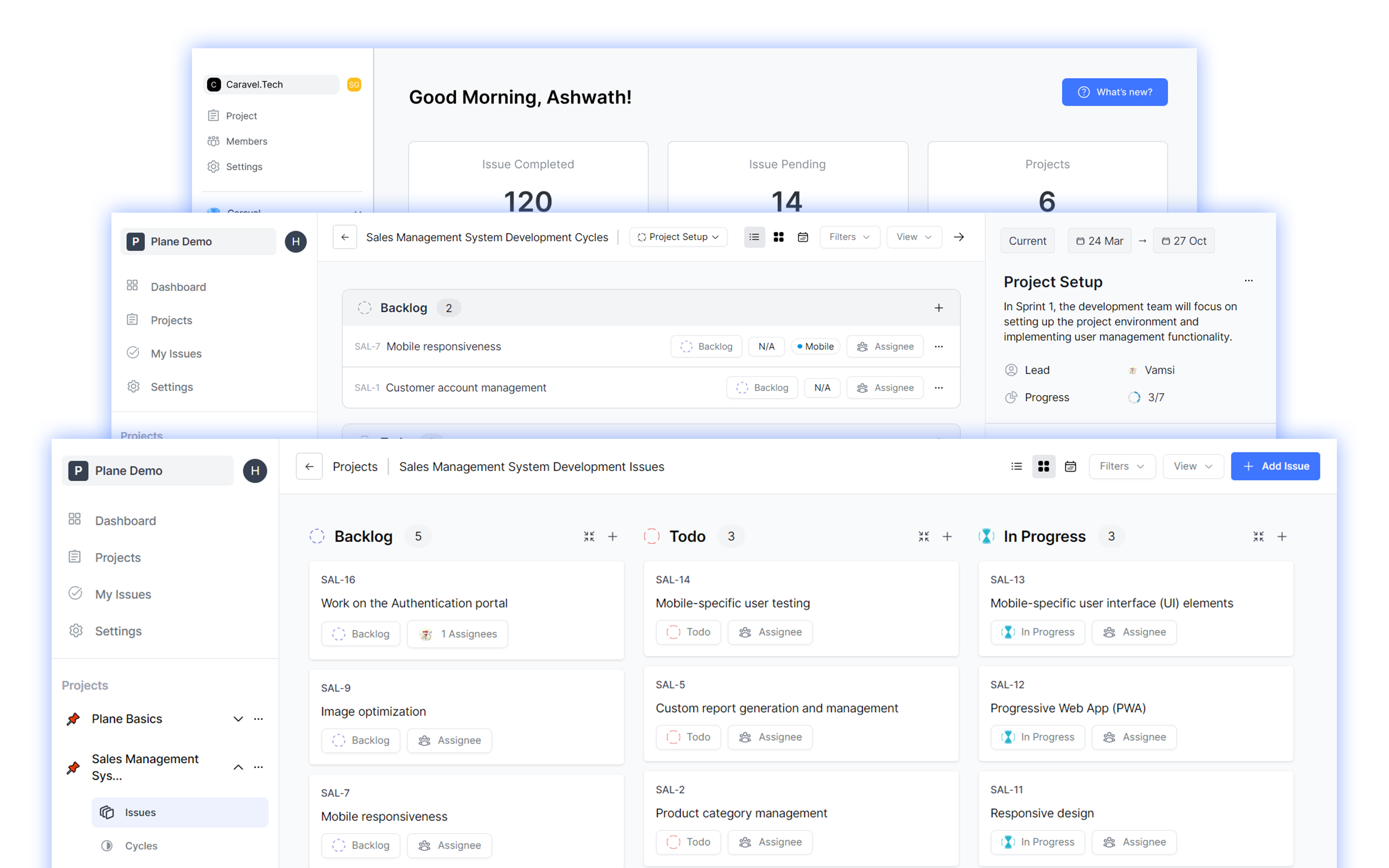

+  @@ -85,41 +92,62 @@ docker compose up -d

## 📸 Screenshots

+


+ -

- -

- +

+

+

+

+
